Probability

Probability has become the default model for apparently random phenomena. Given a random experiment, a probability measure is a population quantity that summarizes the randomness. When we talk about probability we are not referring to the observations (the data collected); rather, we refer to a conceptual property of the population that we would like to estimate.

Kolmogorov’s axioms

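Let $(\Omega, F, P)$ be a measure space, where $\Omega$ is the sample space, $F$ is the collection of events, and $P(E)$ is the probability assigned to an event $E \in F$. $P$ is a probability measure if it satisfies the following three axioms.

- Non-negativity

The probability of an event is a non-negative number: $P(E) \ge 0$ for every $E \in F$.

- Normalization

This is the assumption of unit measure: the probability that at least one of the elementary events in the entire sample space will occur is 1, that is, $P(\Omega) = 1$.

- Countable additivity

This is the assumption of $\sigma$-additivity: for any countable sequence of mutually exclusive (disjoint) events $E_1, E_2, \ldots$,

$$P\left(\bigcup_{i=1}^{\infty} E_i\right) = \sum_{i=1}^{\infty} P(E_i).$$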
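As a rough illustration, the axioms can be checked numerically on a finite sample space. The sketch below assumes a fair six-sided die; `omega`, `p` and the helper `prob` are names introduced here for illustration only. Because the sample space is finite, it can only exercise finite additivity, not countable additivity.

```r
# Sample space of a single roll of an (assumed fair) six-sided die
omega <- 1:6
# Probability of each elementary outcome
p <- rep(1 / 6, length(omega))

# Hypothetical helper: probability of an event given as a subset of omega
prob <- function(event) sum(p[omega %in% event])

# Non-negativity: every probability is >= 0
nonneg <- all(p >= 0)

# Normalization: the whole sample space has probability 1
normalized <- isTRUE(all.equal(prob(omega), 1))

# Additivity for two disjoint events: {2} and {1, 3, 5}
A <- 2
B <- c(1, 3, 5)
additive <- isTRUE(all.equal(prob(union(A, B)), prob(A) + prob(B)))

c(nonneg = nonneg, normalized = normalized, additive = additive)
```

All three entries of the returned vector should be `TRUE` for any valid assignment of probabilities to the six faces, not only the fair one.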

Consequences

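- Monotonicity

If $A \subseteq B$, then $P(A) \le P(B)$.

- The Probability of the empty set

$P(\emptyset) = 0$.

- The complement rule

$P(A^c) = 1 - P(A)$, where $A^c$ is the complement of $A$ in the sample space.

- The Numeric Bound

It immediately follows from the monotonicity property that $0 \le P(E) \le 1$ for every event $E$.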

Further Consequences

- The Addition Law of Probability or The Sum Rule

$P(A \cup B) = P(A) + P(B) - P(A \cap B)$ for any two events $A$ and $B$.
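The consequences listed in this section can be verified by direct counting on a small example. This is a minimal sketch assuming a fair die with equally likely outcomes; the events `A` and `B` and the helper `prob` are illustrative choices, not part of the original text.

```r
omega <- 1:6
# Hypothetical helper: with equally likely outcomes, P(E) = |E| / |omega|
prob <- function(event) length(event) / length(omega)

A <- c(2, 4, 6)  # the roll is even
B <- c(4, 5, 6)  # the roll is greater than 3

# Sum rule: P(A or B) = P(A) + P(B) - P(A and B)
lhs <- prob(union(A, B))
rhs <- prob(A) + prob(B) - prob(intersect(A, B))
sum_rule <- isTRUE(all.equal(lhs, rhs))

# Complement rule: P(not A) = 1 - P(A)
complement <- isTRUE(all.equal(prob(setdiff(omega, A)), 1 - prob(A)))

# Monotonicity: {4, 6} is a subset of A, so its probability cannot exceed P(A)
monotone <- prob(c(4, 6)) <= prob(A)

# The empty set has probability 0
empty <- prob(integer(0)) == 0

c(sum_rule = sum_rule, complement = complement, monotone = monotone, empty = empty)
```

Subtracting `prob(intersect(A, B))` matters here because the outcomes 4 and 6 belong to both events and would otherwise be counted twice.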

Example 1.1

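Suppose you rolled a fair die twice in succession. What is the probability of rolling two 4’s?

Answer: the two rolls are independent, so the probability is $\frac{1}{6} \times \frac{1}{6} = \frac{1}{36}$.

Suppose you rolled a fair die twice. What is the probability of rolling the same number two times in a row?

Answer: $1 \times \frac{1}{6} = \frac{1}{6}$.

Since we don’t care what the outcome of the first roll is, its probability is 1. The second roll then has to match the first, which happens with probability $\frac{1}{6}$.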
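Both questions in Example 1.1 can also be answered by listing all 36 equally likely outcomes of two rolls and counting. The sketch below does this with `expand.grid`; the variable names are illustrative.

```r
# All 36 equally likely outcomes of rolling a fair die twice
rolls <- expand.grid(first = 1:6, second = 1:6)

# Probability of two 4's: one favourable outcome out of 36
p_two_fours <- mean(rolls$first == 4 & rolls$second == 4)

# Probability that both rolls show the same number: six favourable outcomes
p_same <- mean(rolls$first == rolls$second)

c(p_two_fours = p_two_fours, p_same = p_same)
```

Taking the `mean` of a logical vector gives the proportion of `TRUE` entries, which equals the probability when all outcomes are equally likely.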

Probability Mass Functions and Densities

Probability mass and density functions are a way to mathematically characterize the population.

For all kinds of random variables (discrete or continuous) [1], we need a convenient mathematical function to model the probabilities of collections of realizations. These functions, called mass functions and densities, take possible values of the random variables and assign the associated probabilities; they describe the population of interest.

So, consider the most famous density, the normal distribution. Saying that body mass indices follow a normal distribution is a statement about the population of interest. The goal is to use our data to figure out things about that normal distribution: where it is centered, how spread out it is, and even whether our assumption of normality is warranted.

A probability mass function (PMF) is a function that gives the probability that a discrete random variable is exactly equal to some value. Sometimes it is also known as the discrete density function. The probability mass function is often the primary means of defining a discrete probability distribution, and such functions exist for either scalar [2] or multivariate random variables [3] whose domain is discrete.

A probability mass function differs from a probability density function (PDF) in that the latter is associated with continuous rather than discrete random variables. A PDF must be integrated over an interval to yield a probability.

PMF Formal Definition

Notation: an upper case $X$ to denote a random, unrealized, variable and a lowercase $x$ to denote an observed number.

The function $p(x) = P(X = x)$, which gives the probability that $X$ takes the value $x$, is the probability mass function of $X$.

The probabilities associated with the possible values must be positive and sum up to 1. For all other values, the probabilities need to be 0.

Example 1.2

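For instance, suppose we have a coin which may or may not be fair. Let $X = 0$ for tails and $X = 1$ for heads, and let $\theta$ be the probability of a head. Then the PMF is $p(x) = \theta^x (1 - \theta)^{1 - x}$ for $x = 0, 1$.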
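A possibly unfair coin can be written down as a PMF in a few lines of R. The sketch below assumes the usual coding (1 for heads, 0 for tails) and a parameter `theta` for the probability of heads; the function name `coin_pmf` and the value `theta = 0.3` are made up for illustration.

```r
# Hypothetical PMF of a single coin flip: x = 1 for heads, x = 0 for tails,
# theta = probability of heads (assumed parametrization)
coin_pmf <- function(x, theta) {
  ifelse(x %in% c(0, 1), theta^x * (1 - theta)^(1 - x), 0)
}

theta <- 0.3  # illustrative value only

coin_pmf(c(0, 1), theta)       # probabilities of tails and heads
sum(coin_pmf(c(0, 1), theta))  # a valid PMF sums to 1 over the possible values
coin_pmf(2, theta)             # values outside {0, 1} get probability 0

# Cross-check against R's binomial PMF with a single trial
all.equal(coin_pmf(c(0, 1), theta), dbinom(c(0, 1), size = 1, prob = theta))
```

The comparison with `dbinom(..., size = 1)` works because a single coin flip is a binomial experiment with one trial.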

PDF Formal Definition

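For the case when $X$ is a continuous random variable, $P(X = x) = 0$ for every single value $x$, so a mass function is of no use.

We capture the notion of being close to a number with a probability density function which is often denoted by $f(x)$.

Assuming $f$ is continuous at $x$, for a small $\epsilon > 0$ we have $P\left(x - \frac{\epsilon}{2} \le X \le x + \frac{\epsilon}{2}\right) \approx \epsilon f(x)$.

In general, to determine the probability that $X$ lies in a set $A$ of real numbers, we integrate the density over that set: $P(X \in A) = \int_A f(x)\,dx$.

For example, if $A = [a, b]$, then $P(a \le X \le b) = \int_a^b f(x)\,dx$.

For a function $f$ to be a valid PDF, it must satisfy $f(x) \ge 0$ for all $x$ and $\int_{-\infty}^{\infty} f(x)\,dx = 1$.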
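The two requirements on a density, and the idea that probabilities are areas under it, can be checked numerically. This sketch uses the standard normal density `dnorm` as a convenient example (the interval from -1 to 1 is an arbitrary choice) and compares the integral with R's built-in cumulative function `pnorm`.

```r
# Total area under the standard normal density should be 1
total <- integrate(dnorm, lower = -Inf, upper = Inf)$value

# P(-1 <= X <= 1): integrate the density over the interval
p_interval <- integrate(dnorm, lower = -1, upper = 1)$value

# The same probability obtained from the built-in normal CDF
p_cdf <- pnorm(1) - pnorm(-1)

c(total = total, p_interval = p_interval, p_cdf = p_cdf)
```

`integrate` returns a numerical approximation, so the values agree only up to a small tolerance.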

Cumulative and Survival Functions

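The cumulative distribution function (CDF) of a random variable $X$ returns the probability that the random variable is less than or equal to the value $x$: $F(x) = P(X \le x)$.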

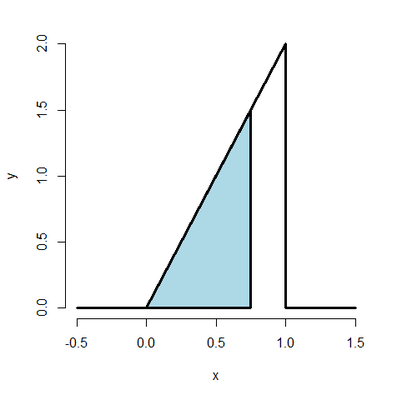

When the random variable is continuous, the PDF is the derivative of the CDF. So integrating the PDF (the line represented by the diagonal) yields the CDF. When you evaluate the CDF at the limits of integration the result is an area.
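The derivative relationship between the CDF and the PDF can be illustrated with a finite-difference approximation. The sketch below uses the standard normal distribution as an example; the evaluation points `x` and the step size `h` are arbitrary choices.

```r
x <- c(-1, 0, 0.5, 2)  # points at which to compare
h <- 1e-5              # small step for the central difference

# Numerical derivative of the CDF
approx_pdf <- (pnorm(x + h) - pnorm(x - h)) / (2 * h)

# Largest discrepancy from the exact density
max_diff <- max(abs(approx_pdf - dnorm(x)))
max_diff
```

The discrepancy should be tiny, reflecting only the error of the finite-difference approximation and floating-point rounding.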

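The survivor function of a random variable $X$ is defined as the probability that the random variable is greater than the value $x$: $S(x) = P(X > x) = 1 - F(x)$.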

Example 1.3

Suppose that the proportion of help calls that get addressed in a random day by a help line is given by the density $f(x) = 2x$ for $0 < x < 1$, and $f(x) = 0$ otherwise.

What is the probability that 75% or fewer of calls get addressed?

x <- c(-0.5, 0, 1, 1, 1.5)

y <- c( 0, 0, 2, 0, 0)

plot(x, y, lwd = 3, frame = FALSE, type = "l")

polygon(c(0, .75, .75, 0), c(0, 0, 1.5, 0), lwd = 3, col = "lightblue")

From the CDF, $F(x) = x^2$ for $0 \le x \le 1$, so $P(X \le 0.75) = F(0.75)$:

.75*.75

0.5625

f <- function(x){2*x}

integrate(f, 0, .75)

0.5625 with absolute error < 6.2e-15

pbeta(.75, 2, 1)

0.5625

Answer: the probability that 75% or fewer of calls get addressed is $P(X \le 0.75) = 0.5625$.
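The survival function answers the complementary question in the same example: the probability that more than 75% of calls get addressed. A minimal sketch, reusing the `pbeta(.75, 2, 1)` call from the example and R's `lower.tail` argument:

```r
# P(X <= 0.75), as computed in the example
p_at_most <- pbeta(0.75, 2, 1)

# Survival function: P(X > 0.75), requested directly via lower.tail = FALSE
p_more <- pbeta(0.75, 2, 1, lower.tail = FALSE)

# The two must add up to 1
all.equal(p_more, 1 - p_at_most)
p_more  # 0.4375
```

Using `lower.tail = FALSE` avoids computing `1 - pbeta(...)` by hand, which can be slightly more accurate for probabilities far in the tail.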

Quantiles

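By Cumulative Distribution Function (CDF), we denote the function that returns probabilities of $X$ being smaller than or equal to some value $x$: $F(x) = P(X \le x)$.

This function takes as input $x$ and returns values from the interval $[0, 1]$ (probabilities), which we denote by $p$.

The inverse of the CDF (or quantile function) tells you what $x$ would make $F(x)$ return some value $p$: $F^{-1}(p) = x$.

The $\alpha$-th quantile of a distribution with distribution function $F$ is the point $x_\alpha$ such that $F(x_\alpha) = \alpha$.

The 0.75 quantile of the help line distribution from Example 1.3 is: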

qbeta(.75, 2, 1)

0.8660254

From Example 1.3, we found that the probability that $X \le 0.75$ is 0.5625, so the 0.5625 quantile is 0.75:

qbeta(.5625, 2, 1)

0.75

A percentile is simply a quantile with $\alpha$ expressed as a percent.
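The inverse relationship between the CDF and the quantile function can be checked directly with `pbeta` and `qbeta`. This sketch relies on the distribution used in Example 1.3, a Beta(2, 1), whose CDF is $x^2$ on the unit interval; the probabilities in `p` are arbitrary illustrative values.

```r
p <- c(0.25, 0.5, 0.75)

# Quantiles of the Beta(2, 1) distribution
x <- qbeta(p, 2, 1)

# Since F(x) = x^2, the quantile function is the square root
all.equal(x, sqrt(p))

# Applying the CDF to the quantiles recovers the original probabilities
all.equal(pbeta(x, 2, 1), p)

# The median is the 0.5 quantile (the 50th percentile)
med <- qbeta(0.5, 2, 1)
med
```

The same round trip holds for any continuous distribution with a strictly increasing CDF, for example `qnorm(pnorm(x))`.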

Conditional probability

Conditional probability is a very intuitive idea, “What is the probability given partial information about what has occurred?”

We can formalize the definition of conditional probability so that the mathematics matches our intuition.

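Let $B$ be an event so that $P(B) > 0$. Then the conditional probability of an event $A$ given that $B$ has occurred is $P(A \mid B) = \frac{P(A \cap B)}{P(B)}$.

If $A$ and $B$ are independent, then $P(A \mid B) = \frac{P(A) P(B)}{P(B)} = P(A)$.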

Random variables are said to be iid if they are independent and identically distributed.
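Conditional probability is the ratio of the probability that both events occur to the probability of the conditioning event, and a die makes this concrete. The sketch below assumes a fair die; the events and the helper `prob` are illustrative. The second half checks that, for two separate rolls, conditioning on the second roll does not change the probability for the first.

```r
omega <- 1:6
# Hypothetical helper: with equally likely outcomes, P(E) = |E| / |omega|
prob <- function(event) length(event) / length(omega)

A <- 1           # the roll is a one
B <- c(1, 3, 5)  # the roll is odd

# P(A | B) = P(A and B) / P(B) = (1/6) / (1/2) = 1/3
p_A_given_B <- prob(intersect(A, B)) / prob(B)

# Independence: two separate rolls of the die
rolls <- expand.grid(first = 1:6, second = 1:6)
first_is_4 <- rolls$first == 4
second_is_4 <- rolls$second == 4

p_cond <- mean(first_is_4 & second_is_4) / mean(second_is_4)  # P(first is 4 | second is 4)
p_marg <- mean(first_is_4)                                    # P(first is 4)

c(p_A_given_B = p_A_given_B, p_cond = p_cond, p_marg = p_marg)
```

Knowing that the roll is odd raises the probability of a one from 1/6 to 1/3, whereas knowing the second roll leaves the probability for the first roll at 1/6.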

Bayes’ Rule

Bayes’ rule allows us to reverse the conditioning set provided that we know some marginal probabilities.

Formally Bayes’ rule is:

$$P(B \mid A) = \frac{P(A \mid B) P(B)}{P(A \mid B) P(B) + P(A \mid B^c) P(B^c)}$$
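A common use of Bayes' rule is to turn the accuracy of a diagnostic test into the probability of disease given a positive result. The numbers below (sensitivity, specificity and prevalence) are invented for illustration and do not come from the text; the sketch only shows how the pieces of the formula fit together.

```r
# Hypothetical inputs, chosen only for illustration
sens <- 0.95  # P(test positive | disease)
spec <- 0.90  # P(test negative | no disease)
prev <- 0.01  # P(disease), the marginal probability

# Bayes' rule: P(disease | test positive)
numerator <- sens * prev
denominator <- sens * prev + (1 - spec) * (1 - prev)
posterior <- numerator / denominator
posterior
```

With these assumed inputs the posterior comes out below 10%, even though the test is fairly accurate, because the disease is rare and false positives outnumber true positives.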

[1] A discrete (random) variable assumes only values in a discrete (finite or countable) set, such as the integers. Mass functions assign probabilities to the specific values it can take. A continuous (random) variable can conceptually take any value on the real line or some subset of the real line, and we talk about the probability that it lies within some range. Densities characterize these probabilities.

[2] A scalar is an element of a field which is used to define a vector space. In linear algebra, real numbers or other elements of a field are called scalars.

[3] More formally, a multivariate random variable is a column vector $\mathbf{X} = (X_1, \ldots, X_n)^T$ whose components are scalar-valued random variables on the same probability space.